But many organisations are finding out the hard way how much storing long-term data in the cloud can cost unless they are very careful about archiving what they do not need. At the other end, battery life may demand a rethink of how sensors talk to other systems.

At the endpoints, transmitting data is often the largest single demand on the battery. Minimising traffic across the network is likely to be vital for what some researchers see as the future of device design, in which devices interact with each other to provide contextual intelligence.

To some extent, many sensor nodes already minimise unnecessary data transmissions. If a sensor does not even wake up until there is a significant change to report, this inevitably reduces power consumption. Compressive sensing pushes the idea even further by moving more advanced processing into the front-end devices to the degree that they convert only a fraction of readings into actual samples.
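The wake-on-change behaviour described above can be sketched in a few lines. This is a minimal software model, assuming a node that compares each new reading with the last value it transmitted and stays silent unless the difference exceeds a fixed threshold. The function name `report_on_change` and the threshold are hypothetical; real nodes often implement this with a hardware comparator or interrupt rather than in firmware.

```python
from typing import Iterable, List, Optional, Tuple


def report_on_change(readings: Iterable[float], threshold: float) -> List[Tuple[int, float]]:
    """Return the (index, value) pairs a node would actually transmit.

    A reading is sent only when it differs from the last *transmitted*
    value by more than `threshold`; everything else is dropped locally.
    """
    sent: List[Tuple[int, float]] = []
    last_sent: Optional[float] = None
    for i, value in enumerate(readings):
        if last_sent is None or abs(value - last_sent) > threshold:
            sent.append((i, value))
            last_sent = value
    return sent


temps = [21.0, 21.1, 21.0, 21.2, 23.5, 23.6, 23.4, 21.0]
print(report_on_change(temps, threshold=0.5))
# → [(0, 21.0), (4, 23.5), (7, 21.0)]
```

Eight readings become three transmissions. Comparing against the last *sent* value rather than the previous reading stops slow drift from creeping past the threshold unreported.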

This does not work in all cases. The information you need from the sensor must be pretty sparse. When it is, regular sampling in effect means capturing and storing a lot of useless noise. It did not take long after the theory was developed for the technique to take root in medical magnetic resonance imaging (MRI). Instrument designers were able to slash not just the amount of data a machine needs to record but also how long a patient must lie immobile inside one, which is particularly important when diagnosing problems in infants.

Several things have stood in the way of compressive sensing in applications beyond medical imaging, even though it is relatively simple to implement in front-end devices. A curious feature spotted by pioneering work in the mid-2000s is how effective randomness is at crunching raw data down into a much more compact vector. Encoding can consist simply of multiplying each block of source data by a randomly populated matrix. Randomness works because, in the ideal case, the contents of the matrix do not correlate with the signal. Random values tend to work well, though you might need to be careful about the distribution of the numbers.
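The encoding step described above really is just a matrix-vector product. The sketch below assumes a random matrix of ±1 entries scaled by 1/√m, one common choice (Gaussian entries are another). The block length, number of measurements and helper names are illustrative assumptions, not taken from any particular device.

```python
import random
from typing import List

Matrix = List[List[float]]


def random_measurement_matrix(m: int, n: int, seed: int = 0) -> Matrix:
    """m x n matrix of random +/-1 entries scaled by 1/sqrt(m).

    The scaling gives every column unit length. The seed makes the matrix
    reproducible, so a receiver can regenerate the same one.
    """
    rng = random.Random(seed)
    scale = 1.0 / m ** 0.5
    return [[scale * rng.choice((-1.0, 1.0)) for _ in range(n)] for _ in range(m)]


def compress(phi: Matrix, x: List[float]) -> List[float]:
    """Encode a block of n raw samples as m < n measurements: y = phi @ x."""
    return [sum(p * v for p, v in zip(row, x)) for row in phi]


# A 64-sample block containing only three non-zero values (a sparse signal).
n, m = 64, 16
x = [0.0] * n
x[5], x[20], x[41] = 1.5, -2.0, 0.7

phi = random_measurement_matrix(m, n, seed=42)
y = compress(phi, x)
print(len(x), "samples ->", len(y), "measurements")
```

The encoder needs no knowledge of where the non-zero values are. That burden moves to the reconstruction side, which must also know `phi`. This is why the measurements are hard to interpret without the matrix.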

One further advantage for IoT applications is that this type of compression obfuscates the data and delivers some additional security without demanding full encryption. And you can trade off different properties here. Because single-bit errors in encrypted data can lead to a high degree of corruption, one option that Qi Zhang and colleagues at Aarhus University have explored is to combine compressive sensing with customised encryption that can tolerate communications errors and dropouts.

Despite these apparent advantages, there are serious caveats with regular compressive sensing. The most important is that you need to be sure the signal of interest is very sparse indeed. If the significant content rises to 5% or more of a stream sampled at the Nyquist rate, the amount of data needed to capture the signal well will probably increase dramatically. Interfering signals also make it much harder to separate out the wanted signal during reconstruction. And that reconstruction process is computationally intensive.
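The standard scaling result from compressive-sensing theory shows why sparsity matters so much. It is a general bound, not a figure from the work discussed here. For a block of n samples with at most k significant coefficients and a suitably random measurement matrix, the number of measurements m needed for reliable recovery grows roughly as:

$$
m \;\gtrsim\; C\,k\,\ln\!\left(\frac{n}{k}\right)
$$

Here C is a constant that depends on the matrix and on how strong a recovery guarantee is wanted. As a worked example with n = 1000, the k ln(n/k) term is about 46 when k = 10 (1% of samples) but about 150 when k = 50 (5%). Once C is applied, the larger figure can eat up much of the saving over simply taking all 1000 samples.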

These issues have largely confined traditional compressive sensing to applications such as MRI, where the computationally intensive part can easily be pushed onto high-power hardware over a network connection. With IoT devices, however, a lot will depend on how much processing needs to be done locally and what can be offloaded elsewhere in the network. Offloading reconstruction may mean transmitting more data wirelessly than is ideal.

Machine learning may bring a new lease of life to the concept by making reconstruction easier. The first steps involved a process known as deep unfolding. This treats each iteration of a reconstruction algorithm as a layer of a network and uses the same training techniques as neural networks to find the parameters needed for signal reconstruction. Deep unfolding can be more efficient than traditional iterative reconstruction techniques. But such reconstruction might not even be needed.
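To give a sense of what "traditional iterative reconstruction" involves, here is a minimal sketch of one classic algorithm, the iterative shrinkage-thresholding algorithm (ISTA). It solves an l1-regularised least-squares problem. It is not necessarily the algorithm used by the researchers discussed here. Deep-unfolding schemes such as LISTA take a fixed number of these iterations, treat each as a network layer, and learn the matrices and thresholds from training data. The matrix size, sparsity level, regularisation weight `lam` and iteration count below are illustrative assumptions.

```python
import random
from typing import List

Matrix = List[List[float]]


def unit_column_gaussian_matrix(m: int, n: int, seed: int = 0) -> Matrix:
    """m x n Gaussian matrix whose columns are rescaled to unit length."""
    rng = random.Random(seed)
    cols = []
    for _ in range(n):
        col = [rng.gauss(0.0, 1.0) for _ in range(m)]
        norm = sum(c * c for c in col) ** 0.5
        cols.append([c / norm for c in col])
    return [list(row) for row in zip(*cols)]  # transpose columns into rows


def soft_threshold(v: float, t: float) -> float:
    """Shrink v towards zero by t; small values become exactly zero."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def ista(phi: Matrix, y: List[float], lam: float = 0.01, iters: int = 1000) -> List[float]:
    """Approximately solve min_x 0.5*||y - phi x||^2 + lam*||x||_1."""
    phi_t = [list(col) for col in zip(*phi)]
    # A step of 1/L is safe when L >= the largest eigenvalue of phi^T phi;
    # the squared Frobenius norm is a cheap, always-valid (if loose) bound.
    step = 1.0 / sum(v * v for row in phi for v in row)
    x = [0.0] * len(phi_t)
    for _ in range(iters):
        predicted = [sum(p * v for p, v in zip(row, x)) for row in phi]
        residual = [yi - pi for yi, pi in zip(y, predicted)]
        # phi^T residual is the negative gradient of the data-fit term.
        neg_grad = [sum(c * r for c, r in zip(col, residual)) for col in phi_t]
        # Gradient step on the data fit, then shrink to promote sparsity.
        x = [soft_threshold(xj + step * gj, lam * step) for xj, gj in zip(x, neg_grad)]
    return x


n, m = 40, 20
x_true = [0.0] * n
x_true[3], x_true[17], x_true[30] = 2.0, -1.5, 1.0
phi = unit_column_gaussian_matrix(m, n, seed=1)
y = [sum(p * v for p, v in zip(row, x_true)) for row in phi]
x_hat = ista(phi, y)
# Indices of the three largest estimated entries, to compare with 3, 17, 30.
print(sorted(range(n), key=lambda j: -abs(x_hat[j]))[:3])
```

Each pass costs two matrix-vector products, and many passes are typically needed. That is the cost deep unfolding tries to cut by learning a shorter, tuned sequence of steps.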

Compressive learning

Almost 15 years ago, a team at Princeton University proposed “compressive learning”, skipping reconstruction entirely to learn patterns directly from the compressed data stream. At the University of Ljubljana, Alina Machidon and Veljko Pejovic have been working on ways to use this to reduce overall energy consumption in wearables and similar designs. In this environment, it is not easy to come up with a fixed measurement matrix and sampling strategy that captures all the possible signal types.

Their experiments showed that a machine-learning model could gauge whether a wearer is standing using a sampling rate of just 10% of the typical rate used by a sensor hub containing accelerometers and gyroscopes. Other activities were far more variable and, perhaps unexpectedly, sitting was the hardest to distinguish, requiring up to 100% of the hardware’s sampling rate. The pair came up with an adaptive approach that used the neural network’s confidence in its output, based on the probability it assigned to its prediction, to decide whether to increase the sampling rate in search of a better prediction.
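The confidence-driven escalation can be sketched as a simple loop. This is a minimal illustration, not the pair's implementation. It assumes a hypothetical `sample_and_classify(rate)` callback that captures a window at a given fraction of the full sampling rate and returns class probabilities. It also assumes confidence means the highest probability. The rate ladder and threshold are made up for the example, and the stub model stands in for a trained network.

```python
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical: capture a window at the given fraction of the full sampling
# rate, run the model, and return class probabilities (e.g. softmax output).
SampleAndClassify = Callable[[float], Dict[str, float]]


def classify_adaptively(
    sample_and_classify: SampleAndClassify,
    rates: Sequence[float],
    min_confidence: float,
) -> Tuple[str, float, float]:
    """Try the lowest sampling rate first and step up only while the top-class
    probability stays below `min_confidence`.

    Returns (label, confidence, rate_used). If no rate reaches the threshold,
    the result from the highest rate is returned.
    """
    if not rates:
        raise ValueError("need at least one sampling rate")
    label, confidence, rate = "", 0.0, 0.0
    for rate in sorted(rates):
        probs = sample_and_classify(rate)
        label, confidence = max(probs.items(), key=lambda kv: kv[1])
        if confidence >= min_confidence:
            break
    return label, confidence, rate


def stub_model(rate: float) -> Dict[str, float]:
    """Stand-in model whose confidence in 'sitting' grows with the rate."""
    p_sitting = 0.4 + 0.5 * rate
    return {"sitting": p_sitting, "standing": 1.0 - p_sitting}


print(classify_adaptively(stub_model, [0.1, 0.25, 0.5, 1.0], min_confidence=0.6))
```

Energy is only spent on higher rates when the cheap attempt is unconvincing. The threshold therefore sets the trade-off between accuracy and sampling energy.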

The adaptive technique proved effective at restricting the energy consumed during sampling, using just under half the energy of a full-rate system for the same model accuracy. One issue with the variable-sparsity approach is that AI models do not typically work well with changing sample rates. The workaround was to split the model into two halves, with a front end that handles the data from the sensor’s different rates feeding a single interpretation model. One area that needs further optimisation is energy consumption at the lowest sampling rates, where relative efficiency tends to decline.
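The two-part structure can be illustrated with a toy pipeline. It assumes each supported rate gets its own front end that squeezes a window of any length into a fixed-size feature vector, which a single shared back end then interprets. The pooling front end, feature size, rate list and threshold rule are all hypothetical stand-ins for trained networks.

```python
from typing import Callable, Dict, List

FEATURE_SIZE = 8  # hypothetical length of the shared feature vector


def mean_abs_pool(window: List[float], size: int = FEATURE_SIZE) -> List[float]:
    """Front end: squeeze a window of any length >= size into `size` bins,
    each holding the mean absolute value of its slice of samples."""
    n = len(window)
    if n < size:
        raise ValueError(f"window of {n} samples is shorter than {size} bins")
    bins = []
    for b in range(size):
        chunk = window[b * n // size:(b + 1) * n // size]
        bins.append(sum(abs(v) for v in chunk) / len(chunk))
    return bins


# One front end per supported sampling rate. They share one pooling function
# here; in a trained system each could be a small network fitted to its rate.
FRONT_ENDS: Dict[float, Callable[[List[float]], List[float]]] = {
    rate: mean_abs_pool for rate in (0.1, 0.25, 0.5, 1.0)
}


def shared_back_end(features: List[float]) -> str:
    """Stand-in for the single interpretation model (a fixed threshold rule)."""
    activity = sum(features) / len(features)
    return "moving" if activity > 0.5 else "still"


def infer(window: List[float], rate: float) -> str:
    """Route the window through its rate-specific front end, then the shared model."""
    return shared_back_end(FRONT_ENDS[rate](window))


# A 10%-rate window and a full-rate window reach the same back end.
print(infer([0.9, -1.1] * 5, rate=0.1), infer([0.9, -1.1] * 50, rate=1.0))
# prints: moving moving
```

Only the small front ends need to know about sampling rates. The interpretation model always sees the same input shape.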

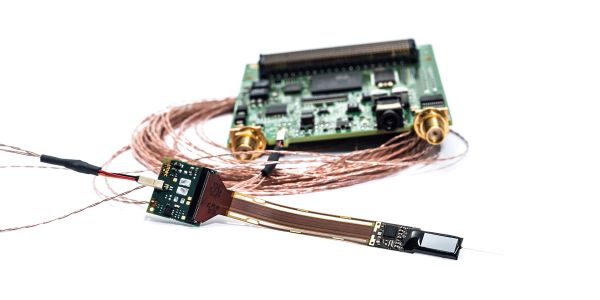

Above: A neural interface from imec that moves more processing into the front end, leading to a denser and less power-hungry device

Because digitisation is, along with RF communication, one of the most energy-intensive operations a simple sensor node can perform, one way to bring consumption down further is to move some of the signal interpretation closer to the source. Eiman Kanjo, visiting professor in pervasive sensing at Imperial College London, and her colleagues refer to this as “prosensing”, in which much of the signal analysis takes place in the analogue domain before any digitisation. Kanjo and colleagues at the University of Edinburgh started an EPSRC project early this year to develop a sensor processor that uses an analogue content-addressable memory, built from resistive RAM cells, to handle feature extraction and classification.
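The project's design is not described in detail here, so the following is only a behavioural sketch of one published style of analogue CAM. In that style, each cell stores a lower and an upper bound, and a row "matches" when every input feature falls inside its cell's range. This lets a row encode one branch of a decision tree. The feature ranges and labels are hypothetical. Hardware would compare all rows in parallel in the analogue domain, whereas this model simply loops.

```python
from typing import List, Optional, Tuple

Range = Tuple[float, float]
Row = Tuple[List[Range], str]

# Hypothetical stored rows: each cell holds an acceptance range (low, high)
# for one input feature; the row's label is returned when every cell matches.
ACAM_ROWS: List[Row] = [
    ([(0.0, 0.2), (0.0, 0.3)], "rest"),
    ([(0.2, 1.0), (0.0, 0.5)], "walk"),
    ([(0.2, 1.0), (0.5, 1.0)], "run"),
]


def acam_lookup(features: List[float], rows: List[Row] = ACAM_ROWS) -> Optional[str]:
    """Return the label of the first row whose ranges all contain the input."""
    for ranges, label in rows:
        if len(ranges) != len(features):
            raise ValueError("feature vector and row width differ")
        if all(lo <= f <= hi for f, (lo, hi) in zip(features, ranges)):
            return label
    return None  # no stored pattern matched


print(acam_lookup([0.6, 0.7]))  # prints: run
```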

More conventional techniques, though again based on an analysis of signal dynamics, also make it possible to move processing closer to the source. For a low-power array of implantable neuron sensors, imec researchers needed a way of capturing not just the sudden voltage spikes biological neurons produce when they fire fully but also “poor” spikes that are ten times smaller. The team used a variant of a technique from low-bitrate telecom channels in which each recorded sample captures the change in intensity relative to its predecessor. The big difference is that the circuit only fires if the change exceeds a threshold. The result was a hundred-fold reduction in power compared with a traditional wired brain interface, with each bit taking 7.5pJ to send to a wearable hub.
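The threshold-triggered change encoding can be modelled in the style of asynchronous delta modulation. This sketch assumes a fixed threshold and one-bit up/down events. The real circuit's parameters are not given here, and the function names and test signal are hypothetical. The encoder tracks a reference level and emits an event only when the input moves more than one threshold step away from it. The receiver rebuilds a staircase by accumulating the steps.

```python
from typing import List, Tuple


def delta_events(samples: List[float], threshold: float) -> List[Tuple[int, int]]:
    """Emit (index, +1/-1) each time the signal moves more than `threshold`
    from the current reference level; the reference then moves one step."""
    if not samples:
        return []
    events: List[Tuple[int, int]] = []
    ref = samples[0]
    for i, s in enumerate(samples[1:], start=1):
        while s - ref > threshold:
            ref += threshold
            events.append((i, +1))
        while ref - s > threshold:
            ref -= threshold
            events.append((i, -1))
    return events


def reconstruct(start: float, events: List[Tuple[int, int]], threshold: float, length: int) -> List[float]:
    """Rebuild a staircase approximation by accumulating the up/down steps."""
    steps = [0] * length
    for i, direction in events:
        steps[i] += direction
    out, level = [], start
    for k in range(length):
        level += steps[k] * threshold
        out.append(level)
    return out


# A quiet trace with a single spike: nothing is sent while the signal is flat.
sig = [0.0] * 50 + [1.0, 0.5] + [0.0] * 48
events = delta_events(sig, threshold=0.25)
print(len(sig), "samples ->", len(events), "events")  # prints: 100 samples -> 5 events
```

After each sample the reconstruction stays within one threshold step of the input. A smaller threshold catches weaker spikes at the cost of more events. On the transmission side, at the quoted 7.5pJ per bit, a hypothetical 1Mbit/s stream would need about 7.5µW.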

The continuing issue for compressive sensing is the need to understand in fine detail how signals behave, in contrast to the relative ease of regular Nyquist sampling. And the trade-offs involved in reconstruction or AI inference might not work in its favour. But when battery energy is scarce, the investment in dealing with those trade-offs may well be worth it.