Embedded device designers and manufacturers can feel a tidal wave of change washing over the industry. Artificial intelligence (AI) and machine learning (ML) technologies promise to turn product design upside down, providing new opportunities to transform the value of embedded products.

But the evolution in applications running at the edge poses severe challenges for the current generation of microcontrollers. The future of MCUs for edge applications belongs to manufacturers that can master the challenge of providing high AI/ML performance with ultra-low power consumption, while enabling designers to work in familiar development and operating environments.

The scale of the demand for edge processing capability can be hard to appreciate. We are in the age of massive IoT adoption: independent forecasts suggest that there will be in the region of 30 billion connected endpoint devices by 2030.

This growth in devices will be mirrored by the volume of data traffic carried over the internet, which the International Telecommunication Union (ITU) said reached more than 5 zettabytes in 2022 and is growing fast. Much of the future growth will be associated with AI operations performed in the cloud.

For devices operating at the edge, however, data transfer to and from the cloud has a real cost: financial in many cases, but equally in power, latency, and reliability. Broad AI/ML adoption at the edge will not succeed unless endpoint devices can perform sophisticated AI operations locally. That means devices must optimise their data exchanges with the cloud, performing as many AI operations locally as possible and transferring data to the cloud only when ultra-high processing capability is required.
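The local-first policy described above can be sketched in a few lines. This is only an illustration under stated assumptions, not a vendor API: `run_local_model`, `send_to_cloud`, and the 0.8 confidence threshold are hypothetical placeholders, and low confidence is just one possible signal that more processing capability is needed.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

# Hypothetical model interface: takes a sample, returns (label, confidence).
Model = Callable[[Sequence[float]], Tuple[str, float]]


@dataclass
class Inference:
    label: str
    confidence: float  # 0.0 to 1.0
    source: str        # "local" or "cloud"


def classify(
    sample: Sequence[float],
    run_local_model: Model,
    send_to_cloud: Model,
    min_confidence: float = 0.8,  # assumed threshold, tune per application
) -> Inference:
    """Run inference on the device first; use the cloud only as a fallback."""
    label, confidence = run_local_model(sample)
    if confidence >= min_confidence:
        # Confident local result: no data leaves the device.
        return Inference(label, confidence, "local")
    # Local result is too uncertain: pay the cost of a cloud round trip.
    label, confidence = send_to_cloud(sample)
    return Inference(label, confidence, "cloud")
```

With this structure, the radio is used only for the samples the on-device model cannot resolve, which is where the savings in power and latency would come from.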

AI/ML is driving endpoint intelligence, enabling OEMs to conceive new and more valuable types of devices, from electromyographic (EMG) wristbands that can convert the wearer’s thoughts into type, to smart glasses with true augmented reality functions.

Traditional MCU manufacturers are racing to catch up with the surge in demand for AI capability, and OEMs that produce IoT endpoints and edge devices have been struggling to make meaningful advances in ML functionality, hampered by the constraints of power, cost and determinism to which MCU applications are subject. A new generation of MCUs is required that can perform AI functions quickly and efficiently at ultra-low power.

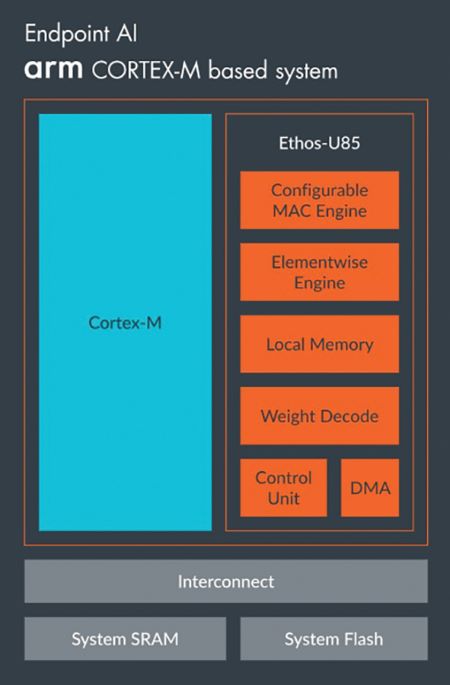

Above: The Arm Ethos NPU can be combined effectively with a Cortex-M CPU in a microcontroller architecture. (Image credit: Arm)

Defining characteristics

The first requirement arises from the nature of AI’s compute operations. A RISC CPU such as an Arm Cortex-M core is suitable for the sequential, deterministic control functions that MCUs traditionally perform. But neural network processing is an entirely different kind of operation, which calls for an entirely different kind of processor: a neural processing unit (NPU).

Since edge AI applications require both the control functions and the AI functions, the MCU must perform both, with a RISC CPU alongside an NPU on a single chip.

There is a broad choice of NPU IP available, including some manufacturers’ own proprietary IP, and this is an important factor to consider. The fragmented nature of the embedded device industry means that many companies do not have the resources to train AI/ML developers to work with a proprietary hardware platform: they need to be able to call on a common pool of AI developers.

This mirrors the situation that embedded device manufacturers currently enjoy for development of control functions: because the MCU industry has standardised on the Arm M-Profile CPU architecture, embedded device OEMs can draw on a global pool of embedded system developers who are familiar with a common set of tools and development environments which are compatible with M-Profile CPUs.

In the field of AI/ML, it is already difficult enough to find talented developers, and the shortage could become more acute as more embedded OEMs start development of AI-enabled products. As with the CPU, so with the NPU: the best solution is standardisation on a common architecture used by most MCU suppliers, creating a large pool of engineers with shared knowledge of compatible AI model frameworks and algorithms, such as TinyML and PyTorch.

Alif Semiconductor believes that the best candidate for a common NPU architecture for MCUs is the Ethos NPU supplied by Arm, an AI/ML architecture which dovetails perfectly with the Arm M-Profile on which Arm Cortex-M CPU cores are based.

Another feature of the embedded device industry is the diversity of its output, which shows wide variability in response to the variety of customer requirements. The opportunity to add value by building in AI/ML functionality extends the diversity yet further: in many cases, different AI models are applied to low-end, mid-range and premium products.

This calls for an MCU platform which can scale from low-end to premium functionality. At the same time, time-to-market pressure means that OEMs need to re-use software across their range of products. A single architecture spanning an entire portfolio will allow for software re-use from the bottom to the top of an OEM’s range.

And if this scalable AI capability is to be applied at the edge, where devices such as personal health monitors and true wireless stereo earbuds are powered by a small battery, ultra-low power consumption in and out of ML mode is essential. In embedded applications, many of the AI/ML implementations will be handling sensor inputs in the audio (speech or sound) or video domains and will need to provide inferencing outputs in a few milliseconds while consuming a fraction of a millijoule of energy.
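The relationship between inference latency, power and battery life can be made concrete with a short calculation. The sketch below rests on assumptions: the function names are made up for illustration, and the figures in the usage note are hypothetical rather than measurements of any device.

```python
def energy_per_inference_mj(avg_power_mw: float, latency_ms: float) -> float:
    """Energy in millijoules: average power (mW) multiplied by time (s)."""
    return avg_power_mw * (latency_ms / 1000.0)


def inferences_per_charge(battery_mwh: float, energy_mj: float) -> float:
    """Inferences a battery could supply if it powered nothing else.

    This is an idealised upper bound: it ignores sleep current, radio use,
    sensors and converter losses.
    """
    battery_mj = battery_mwh * 3600.0  # 1 mWh = 3.6 J = 3600 mJ
    return battery_mj / energy_mj
```

For example, a hypothetical inference that draws an average of 50 mW for 5 ms costs about 0.25 mJ, so an assumed 100 mWh cell could in principle supply on the order of 1.4 million such inferences before any other load is counted.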

Traditional MCUs based on a RISC CPU core, even when running at maximum speed and power, struggle to provide the required AI performance, which can fall anywhere in a range from 10 GOPS to 1 TOPS. At the same time, legacy MCU manufacturers are burdened with fragmented families of disparate MCUs, frustrating users’ attempts to port software between them.
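To see how a workload maps onto the GOPS figures above, a rough estimate can be derived from a model's multiply-accumulate (MAC) count and the required inference rate. This is a back-of-envelope sketch: the function name is illustrative, the convention of counting one MAC as two operations is an assumption (it is common but not universal), and the estimate assumes perfect hardware utilisation.

```python
def required_gops(macs_per_inference: float, inferences_per_second: float) -> float:
    """Sustained throughput needed, in GOPS (billions of operations per second).

    Assumes each multiply-accumulate counts as two operations
    (one multiply plus one add) and 100% hardware utilisation.
    """
    ops_per_second = 2.0 * macs_per_inference * inferences_per_second
    return ops_per_second / 1e9
```

Under these assumptions, a hypothetical vision model needing 500 million MACs per frame at 30 frames per second would call for about 30 GOPS of sustained throughput, which sits inside the range quoted above. Real devices rarely reach full utilisation, so the practical requirement would be higher.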

Embedded OEMs will also need substantially enhanced tool chains and model frameworks that more effectively lift AI development out of the realm of the specialist and make AI/ML development feasible for the mass of general embedded developers. Standardisation on the Ethos NPU platform will help foster development of this bigger and more user-friendly software development ecosystem.

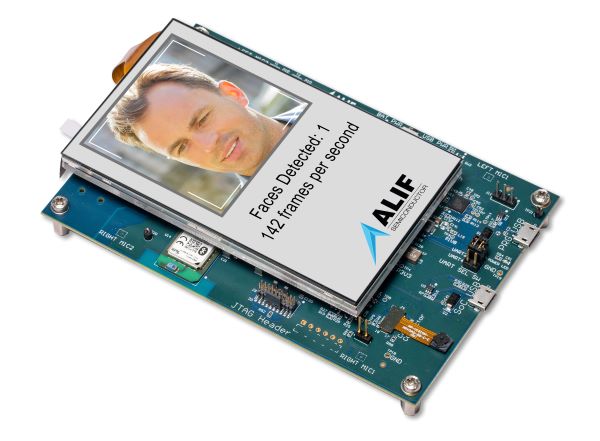

Above: The Ensemble Application Kit, AK-E7-AIML, includes the Ensemble E7 fusion processor, a camera, microphones, a motion sensor, and a colour display

A new answer?

Alif Semiconductor's Ensemble family of MCUs and fusion processors has an architecture built from the beginning to support AI/ML functionality in an Arm-based environment. It allows CPUs - Arm Cortex-M55 and Cortex-A32 cores - to co-exist on the same silicon with on-chip Ethos NPUs.

The family of devices stretches from the single-core E1 series MCUs up to the quad-core E7 series fusion processors. Because all Ensemble devices share the same architecture, including an AXI bus for inter-processor communication and an advanced secure enclave, software is easily re-used across all devices. The wireless Balletto MCU family adds Bluetooth connectivity to the Ensemble architecture.

These families incorporate standard Arm processor cores and NPUs, avoiding the use of closed, proprietary IP - a strategic choice made to enable OEM developers to take advantage of readily available frameworks for AI development. The Arm architecture is continually updated to maintain compatibility with the latest versions of all important AI software frameworks.

Because Ensemble and Balletto MCUs are AI-native devices, they run ML operations at ultra-low power. On standard AI performance benchmark tests, an Ensemble E1 MCU completes inferencing operations on its Ethos NPU as much as 100x faster than a typical MCU, while consuming as little as 1% of the power.

This appears to be the direction of travel of the MCU market: towards more AI capability at lower power, liberating embedded edge and battery-powered devices from their reliance on the cloud.

Author details: Reza Kazerounian is President, Alif Semiconductor