At the Linley Spring Processor Conference earlier this year, Arm showed how much processors designed for hard real-time control are changing. Traditionally, they have operated with limited memory. Even a gigabyte seems luxurious in the context of a 32-bit processor intended to run the safety systems of a car or the motor controllers in an industrial robot. Arm's Cortex-R82 pushes well past that with the ability to address up to 1TB.

"It is something we thought was impossible just a few years ago," explained Arm senior IoT solutions manager Lidwine Martinot at the conference, noting that machine learning and analytics provide much of the driving force behind this sudden expansion in the amount of memory that manufacturers want to attach to real-time processors.

“There is an increasing need for this computation to be very close to the source of the data. Why send a whole image back to the servers when you have the ability to analyse the images locally?” Martinot said. “The next generation of autonomous vehicles are set to produce more than a terabyte daily. Today, a robot can already produce several gigabytes of data daily.”

Much of that memory will not be used by real-time tasks directly but by software running alongside them under Linux or Windows, partly because of the ready availability of open-source development environments such as PyTorch and TensorFlow, and partly because these operating systems provide ready access to huge address spaces.

That’s been possible for some time, first through specialised operating systems such as real-time versions of Linux, which made it possible to prioritise tasks with hard deadlines over less time-sensitive applications. That approach has largely given way to virtualisation and the use of separation kernels, such as those provided by Green Hills Software, Lynx Software Technologies and other suppliers.

Under this type of scheme, all accesses to I/O ports or memory outside an operating system’s address space generate an exception or trap that is intercepted by the separation kernel or hypervisor, which can choose to block, allow or modify the operation. Virtualisation of I/O and memory accesses in this way makes it difficult for one guest operating system to interfere with the operation of another. This is vital for mixed-criticality systems where there may be one or more real-time engines that have control over machinery running alongside other modules that support communications with the cloud or human-machine interfaces.
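The block, allow or modify decision can be pictured with a short Python sketch. It is an illustration only, not how any commercial separation kernel is implemented: the guest names, the address windows, the shared device region and the `handle_trap` function are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    MODIFY = "modify"


@dataclass(frozen=True)
class Access:
    guest: str     # name of the guest operating system making the access
    address: int   # address the guest tried to touch
    write: bool    # True for a write, False for a read


# Hypothetical memory map: each guest owns one inclusive (start, end) window.
GUEST_WINDOWS = {
    "rtos": (0x0000_0000, 0x0FFF_FFFF),
    "linux": (0x1000_0000, 0x7FFF_FFFF),
}

# Hypothetical shared device region that guests may read but not write directly.
SHARED_DEVICE = (0x8000_0000, 0x8000_0FFF)


def handle_trap(access: Access) -> Action:
    """Decide what the hypervisor does with an intercepted access."""
    start, end = GUEST_WINDOWS[access.guest]
    if start <= access.address <= end:
        # On real hardware an in-window access would not trap at all;
        # it is handled here only so the policy is complete.
        return Action.ALLOW
    dev_start, dev_end = SHARED_DEVICE
    if dev_start <= access.address <= dev_end:
        # Reads pass through; writes are rewritten, for example by
        # redirecting them to an emulated device.
        return Action.MODIFY if access.write else Action.ALLOW
    # Anything else, including another guest's memory, is refused.
    return Action.BLOCK
```

In this sketch, a Linux guest that tries to write into the real-time guest's window gets `Action.BLOCK`, which is the property a mixed-criticality system depends on.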

The expectation is that machine-learning models will change on a regular basis. A major concern is that real-world conditions drift away from the data the model was trained on. To avoid that, the models will be regularly retrained on new data and then distributed to the systems in the field.

Pavan Singh, vice president of product management at Lynx, said the real-time half will be treated differently. “It will use a more traditional development model: they won’t be updated as frequently and will have to meet formal security and safety rules.”

Containerisation

To help deliver these upgrades to the Linux portion, development teams are beginning to turn to techniques that are now commonplace in cloud computing. In that environment, a modified form of virtualisation has taken hold: containerisation. In the server space, the motivation for containers initially was performance.

The repeated context switches that result from each I/O access under virtualisation quickly take a toll. Containers take advantage of security features built into Linux and similar operating systems that make it hard, though not impossible, for user-level software to make accesses outside its own space, and they do so without incurring the overhead of frequent context switches.

A second advantage of the container for cloud users quickly emerged: the libraries and system-level functions that an application needs can be packaged together into one stored image and kept separate from other containers running on the same processor. As long as a server blade can run the binary, the container will function more or less anywhere it runs. That in turn led to the rise of orchestration, a management method where tools such as Google’s Kubernetes automatically load, run, move and destroy containers based on whatever rules the administrators have determined.

Work is no longer confined to specific servers. Instead, applications move around a data centre based on hardware availability and cost. The results of this trend are striking.

Analysis by security-monitoring specialist Sysdig found almost half of the containers deployed to the cloud last year ran for less than five minutes. Fewer than five per cent lasted more than a couple of weeks. Most would perform a task and once completed were taken down and stored ready for another job sometime in the future. In the meantime the computing resources could be used for other, probably equally short-lived containers.

However, Singh points out a key difference between the likely uses in embedded systems and those in the data centre, where the trend is towards so-called serverless computing: a deployment philosophy where the location of the software is largely immaterial. Kubernetes and similar tools do not yet treat location as important, but concerns such as latency make it important for edge-computing and embedded systems. It is not going to help maintain real-time behaviour if the orchestrator tries to deploy a container carrying an AI model several kilometres away from the robot the model is supposed to help. “In the short term, it will be a challenge to give the orchestrators the intelligence to say where applications are best deployed. In the long term it will evolve that way,” Singh says.
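A minimal Python sketch shows the kind of location awareness Singh describes. It is a hypothetical placement rule, not a Kubernetes feature or API: the `Node` type, the `place` function and the latency and core figures are assumptions made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Node:
    name: str
    latency_ms: float  # assumed round-trip latency from this node to the robot
    free_cores: int    # cores currently available for new containers


def place(nodes: list[Node], max_latency_ms: float, cores_needed: int) -> Optional[Node]:
    """Pick the lowest-latency node that meets the latency bound and has room."""
    candidates = [
        n for n in nodes
        if n.latency_ms <= max_latency_ms and n.free_cores >= cores_needed
    ]
    if not candidates:
        # Refuse to deploy rather than put the model somewhere too far away.
        return None
    return min(candidates, key=lambda n: n.latency_ms)


# Hypothetical fleet: a cloud region, a gateway in the robot cell, the robot itself.
NODES = [
    Node("cloud-region", latency_ms=40.0, free_cores=64),
    Node("cell-gateway", latency_ms=2.0, free_cores=4),
    Node("robot", latency_ms=0.1, free_cores=1),
]
```

With a 5ms bound and two cores required, `place(NODES, 5.0, 2)` selects the cell gateway: the cloud region has ample capacity but is too far away, and the robot is closest but has too few free cores.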

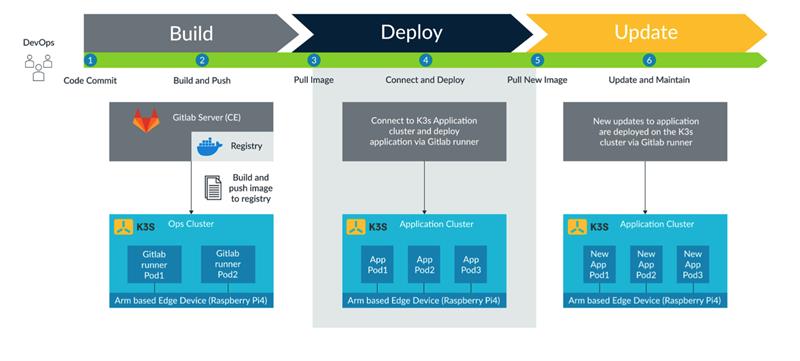

Figure 1: The K3s/GitLab CI/CD pipeline automatically moves changes from build to deploy and update

Application building

In practice, tools like Kubernetes will not be used to move containers automatically but used to make application building and deployment quicker and easier to perform than with traditional development environments. That is how Wind River senior director of product management Michel Chabroux sees its own sub-100Kbyte container designed to run directly under VxWorks, as well as the implementations for Linux, being used. “The idea is to leverage an existing standard to simplify the deployment of embedded applications,” he said, adding that Kubernetes will largely be used to get better visibility of connected systems rather than handling automated distribution and deployment.

Once in place, a containerised application will likely live longer on the hardware than most of its cloud counterparts but may not be permanently active. Applications to perform predictive maintenance or analytics might run only when the core real-time tasks are not fully active. For example, in a robot, some of these containers may only run when it is idle or charging, taking cores that would be used for real-time vision when it is in operation on a shopfloor. This ability to change the roles of processor cores on the fly is one reason why Arm sees architectures such as the Cortex-R82 taking over from the traditional approach of mixing an A-series and R- or M-series processor on a PCB. The integration offered by nanometre-class processes makes it possible to consolidate many more workloads onto a single piece of silicon, and having numerous identical cores able to run either real-time or server-class software maximises the silicon’s flexibility.
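The robot example can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Arm's mechanism: the three robot states, the workload names and the `assign_cores` function are hypothetical, and the sketch assumes motor control always keeps a fixed number of cores.

```python
from __future__ import annotations

from enum import Enum


class RobotState(Enum):
    OPERATING = "operating"
    IDLE = "idle"
    CHARGING = "charging"


def assign_cores(state: RobotState, total_cores: int, control_cores: int) -> dict[str, int]:
    """Split identical cores between workloads according to the robot's state."""
    if control_cores > total_cores:
        raise ValueError("control_cores cannot exceed total_cores")
    spare = total_cores - control_cores
    if state is RobotState.OPERATING:
        # On the shopfloor the spare cores run real-time vision.
        return {"control": control_cores, "vision": spare, "analytics": 0}
    # When idle or charging, the same cores host analytics containers instead.
    return {"control": control_cores, "vision": 0, "analytics": spare}
```

On a hypothetical eight-core part with two cores reserved for control, the other six serve vision while the robot works and analytics containers while it charges.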

A key difference between embedded systems and cloud models lies in the container technology itself. The containers used in the cloud environment lack the security features that mixed-criticality systems need. To address the problem, Intel developed a concept it called Clear Containers, later renamed Kata. This approach uses hardware features in its x86 processors, such as SR-IOV hardware I/O virtualisation, to enforce a better level of isolation. The concept now lies at the heart of Project ACRN, which, despite attempts to port it to Arm, remains largely focused on the Intel ecosystem.

Arm has its own Project Cassini, while LF Edge’s Eve, which is based on technology donated by start-up Zededa, is a more hardware-independent project. Separation kernels can provide a higher level of security by enforcing full virtualisation at the cost of greater software-level intervention.

As these technologies mature, more hardware virtualisation support will likely wind up in real-time processors that sport large addressing ranges to reduce latency for the guest operating systems and containers.

At the same time, management features associated with containers will start to migrate even into the real-time portions.