One of the key components that comes from the cloud, and which has made its way into the SOAFEE architecture for software-defined vehicles promoted by Arm and its partners, is the container. The container concept is pushing at an open door, not least because embedded systems designers have already widely embraced its close relative and predecessor: virtualisation. Designers have adopted virtualisation for a variety of reasons, ranging from security and resilience to saving money by consolidating many functions onto a single SoC without demanding structural changes to existing software. The hypervisor provides each operating system and the applications it hosts with the illusion of having unfettered access to a processor’s I/O and memory map while preventing them from gaining access to the full machine.

For a good many applications, virtualisation remains a necessity and is often augmented by an isolation kernel such as Green Hills Software’s Integrity to prevent rogue tasks from taking control of the entire system or reading data from a secure area. The protection offered by virtualisation comes with overheads because it imposes a layer of indirection on every I/O transaction and on a good many operating-system services.

The container comes with reduced protection and isolation but also a lower overhead. But it is not a case of using one or the other. Cloud providers often use virtualisation on their servers to hold management tasks in one space and all the user modules in the other. This stops hackers using software they load into containers to take control of other applications running on the same node. But the operators still gain most of the performance advantages where memory and I/O requests lie mostly in the container space.

Containers need not be limited to Linux: Wind River is one vendor that has added container support to its own proprietary real-time operating systems. However, the features offered by containers are closely tied to the underlying operating system because they take advantage of its features. The Linux container uses a filesystem command to move the root folders into a tree sited in the container’s home directory. As long as the software uses standard Linux calls it cannot break out of this re-rooted tree and overwrite core operating system files.
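
As a rough illustration of the re-rooting idea, the sketch below models how a path requested inside a container maps onto the host filesystem. It is a pure-Python model, not a real container runtime: the function name `resolve_in_container` and the example root directory are hypothetical, and symbolic links are ignored.

```python
import posixpath


def resolve_in_container(container_root: str, path: str) -> str:
    """Model where a path seen inside the container lands on the host.

    Hypothetical helper for illustration only; a real runtime relies on
    the kernel to enforce this, not on string manipulation.
    """
    # Treat the request as absolute from the container's point of view.
    # normpath drops any ".." that would climb above "/", which mirrors
    # how ".." at the top of a re-rooted tree stays at the top.
    inside = posixpath.normpath("/" + path.lstrip("/"))
    relative = inside.lstrip("/")
    if not relative:
        return container_root
    return posixpath.join(container_root, relative)


# Assumed example location for one container's root filesystem.
ROOT = "/var/lib/containers/c1/rootfs"
print(resolve_in_container(ROOT, "/etc/passwd"))
print(resolve_in_container(ROOT, "/../../etc/passwd"))
```

Both calls resolve to the same file under the container's own tree, which is why an attempt to climb out with `..` does not reach the host's core files in this model.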

One consequence of this ability to create many copies of the operating system libraries and host them in different parts of the filesystem is that each container, and the software inside it, can run with its own versions of these support functions without affecting other containers that use different versions of those libraries.

Though applications cannot easily use shared memory to communicate directly with those in other containers, they can use network interfaces to send data to each other. Cloud developers have exploited this combination of features to assemble systems from so-called microservices. Each microservice runs in its own container, offering a specific set of functions.
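
A minimal sketch of this style of communication follows, assuming a line-based TCP protocol invented for the example. Both ends run in one process here for brevity; in a real deployment the service and its caller would sit in separate containers and the address would come from the orchestrator. The names `UppercaseHandler`, `call_service` and `demo` are hypothetical.

```python
import socket
import socketserver
import threading


class UppercaseHandler(socketserver.StreamRequestHandler):
    """Toy microservice: reads one line and returns it in upper case."""

    def handle(self):
        line = self.rfile.readline()
        self.wfile.write(line.upper())


def call_service(address, message: str) -> str:
    """Client side: send one request over TCP and read one reply line."""
    with socket.create_connection(address, timeout=5) as conn:
        conn.sendall(message.encode() + b"\n")
        return conn.makefile("rb").readline().decode().strip()


def demo() -> str:
    # Port 0 asks the operating system for any free port on localhost.
    with socketserver.TCPServer(("127.0.0.1", 0), UppercaseHandler) as server:
        worker = threading.Thread(target=server.serve_forever, daemon=True)
        worker.start()
        try:
            return call_service(server.server_address, "status")
        finally:
            server.shutdown()


if __name__ == "__main__":
    print(demo())
```

The only contract between the two sides is the network message format, which is what lets each service be upgraded or relocated independently.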

The microservice architecture makes it easier to upgrade components and the libraries on which they depend. Because they sit in different containers there is less risk of changes affecting other components. It also makes it easier to develop code and test it, which is one major reason why the container is now the standard target for cloud deployment. In cloud systems, that ease of deployment helped drive the shift to so-called serverless computing.

Serverless computing

Serverless is a bit of a misnomer. Servers have not gone away. You just don’t have dedicated servers for specific applications. Instead, orchestration software such as Kubernetes decides where to deploy and run containers. Kubernetes takes in requests for deployment and pushes the container onto whichever node satisfies the rules the job comes with, ranging from simple availability to the ability to meet performance targets. Once a job is finished, there is no need to keep the container running and incur bills from the cloud provider: users can just move the instance back into storage until they need it to run again.
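
The placement decision can be sketched as a filter step followed by a scoring step. This is a toy model and not the Kubernetes API: the `Node` and `Job` types, their fields and the "most spare CPU" scoring rule are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int
    labels: frozenset = frozenset()


@dataclass
class Job:
    name: str
    cpu_millicores: int
    memory_mib: int
    required_labels: frozenset = frozenset()


def pick_node(job: Job, nodes: list) -> Optional[Node]:
    """Return a node that satisfies the job's rules, or None if none does."""
    # Filter: keep only nodes with enough resources and the right labels.
    feasible = [
        node
        for node in nodes
        if node.free_cpu_millicores >= job.cpu_millicores
        and node.free_memory_mib >= job.memory_mib
        and job.required_labels <= node.labels
    ]
    if not feasible:
        return None
    # Score: prefer the node left with the most spare CPU (assumed policy).
    return max(feasible, key=lambda n: n.free_cpu_millicores - job.cpu_millicores)


nodes = [
    Node("edge-a", 500, 512),
    Node("edge-b", 2000, 4096, frozenset({"gpu"})),
    Node("edge-c", 4000, 8192),
]
chosen = pick_node(Job("vision", 1000, 1024, frozenset({"gpu"})), nodes)
print(chosen.name if chosen else "unschedulable")
```

A job that no node can satisfy simply stays unscheduled in this sketch; a real orchestrator would queue it and retry as resources change.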

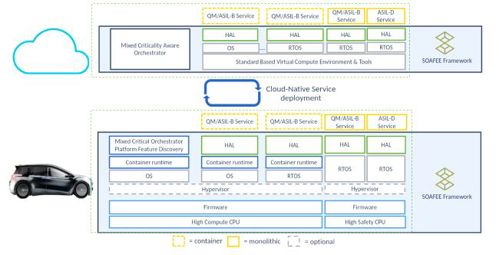

Above: The figure shows the containers within the architecture

When it comes to using serverless concepts in embedded systems, there is a tradeoff between guaranteeing response times and flexibility of resource usage. In the short term, this tradeoff may see the technique being used to support services that only need to run occasionally. Serverless containers might support predictive maintenance or run models to better schedule tasks among machine tools. But research points to serverless-like concepts possibly also moving into systems with hard real-time deadlines.

Václav Struhár and colleagues at Mälardalen University in Sweden and Austrian automotive specialist TTTech have proposed using a mixture of static and dynamic deployment to ensure real-time systems have a guaranteed baseline performance. The dynamic part uses profiling software to reallocate containers as their dynamic performance needs become clearer during runtime. For example, if containers are forcing important data out of caches regularly and suffer high cache-miss rates, that increases the risk of missed deadlines. Swapping containers that interact more cooperatively could improve performance without any changes to the application software.
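
One way to picture the dynamic part is a planner that reads profiled cache-miss rates and proposes moves for containers that are not part of the static baseline. The sketch below is an assumed heuristic written for this article, not the researchers' algorithm: the function name, the 0.2 threshold and the idea of summing miss rates per core cluster are all illustrative choices.

```python
from collections import defaultdict


def plan_migrations(placement, miss_rate, static, threshold=0.2):
    """Propose moves for dynamically deployed containers with high miss rates.

    placement: container name -> core cluster it currently runs on
    miss_rate: container name -> profiled cache-miss rate (0.0 to 1.0)
    static:    containers in the guaranteed baseline, which never move
    """
    # Sum the miss rates on each cluster as a crude measure of contention.
    pressure = defaultdict(float)
    for container, cluster in placement.items():
        pressure[cluster] += miss_rate[container]

    moves = {}
    # Consider the worst offenders first.
    for container in sorted(placement, key=lambda c: miss_rate[c], reverse=True):
        if container in static or miss_rate[container] <= threshold:
            continue
        source = placement[container]
        target = min(pressure, key=pressure.get)
        # Move only if the target would still be calmer than the source is now.
        if target != source and pressure[target] + miss_rate[container] < pressure[source]:
            moves[container] = target
            pressure[source] -= miss_rate[container]
            pressure[target] += miss_rate[container]
    return moves


placement = {"brake-ctl": "cluster0", "vision": "cluster0", "logger": "cluster1"}
miss_rate = {"brake-ctl": 0.30, "vision": 0.35, "logger": 0.05}
print(plan_migrations(placement, miss_rate, static={"brake-ctl"}))
```

The sketch assumes a container keeps the same miss rate after it moves, which will not hold in practice; a real profiler would measure again after each migration.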

Though those kinds of cache conflicts could be detected during prototyping for a good many systems, the increase in the use of machine learning and dynamically loaded containers may make these runtime migrations more important. Regular changes in the downloaded models may change the memory-usage profile enough to trigger unexpected interactions.

Business models may also make serverless concepts more enticing. One option is the function as a service (FaaS) model. This is where users rent access to facilities such as more advanced control software instead of loading it permanently into a robot or machine tool. Siemens and other suppliers to this sector anticipate a move away from fixed-function machine tools to systems that use networked control and dynamically loaded software to handle changes in production techniques far more rapidly.

FaaS could be used to give a robot the temporary ability to handle a particular type of production job. When the robot returns to its regular role, orchestration software deletes the container.

Marcello Cinque, assistant professor of computer engineering at the University of Naples, sees active testing as another application in a scenario where comparisons with a digital twin fed on replicated data reveal a bug in one of a robot’s modules. The fix runs in parallel on a different node while the developers carry out checks before replacing the primary module.

Some researchers have proposed using the networking inherent to this structure to deliver fault tolerance without requiring each microservice to have an active hot standby running all the time. However, the open question is whether such a system can detect a failure and load replacement containers quickly enough to fill in for a failed node in applications that have hard deadlines. The approach may be better suited to functions that do not have hard deadlines but whose overall availability is important, such as inspection systems using advanced image analysis. Such a system might need to maintain high average throughput but can still cope with minor delays as the load balancer finds a candidate machine to replace failed containers.

Luca Abeni and colleagues at the Sant’Anna School of Advanced Studies in Pisa and Ericsson Research plan to develop a framework based on Kubernetes to support this kind of fault tolerance. Another team, at the University of Pisa, has focused more on energy management, powering down containers and moving them around based on demand to minimise overall power consumption: effectively a large-scale analogue of Arm’s big.LITTLE architecture.
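
The energy-management idea can be illustrated by packing containers onto as few nodes as possible so the remainder can be powered down. The sketch uses the first-fit decreasing bin-packing heuristic, which is an assumption chosen for brevity and not the method the Pisa team describes; the function name and the percentage-of-CPU units are hypothetical.

```python
def consolidate(demands: dict, node_capacity: int) -> list:
    """Pack containers onto as few nodes as this heuristic manages.

    demands:       container name -> CPU demand, in percent of one node
    node_capacity: capacity of each identical node, in the same units
    Returns one list of container names per node that must stay powered.
    """
    nodes = []  # each entry is [remaining capacity, [container names]]
    # First-fit decreasing: place the largest demands first.
    for name, demand in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        if demand > node_capacity:
            raise ValueError(f"{name} does not fit on any node")
        for node in nodes:
            if node[0] >= demand:
                node[0] -= demand
                node[1].append(name)
                break
        else:
            # No powered node has room, so another one has to stay on.
            nodes.append([node_capacity - demand, [name]])
    return [names for _, names in nodes]


demands = {"a": 60, "b": 50, "c": 40, "d": 30, "e": 20}
for index, names in enumerate(consolidate(demands, node_capacity=100)):
    print(f"node {index}: {names}")
```

Any node not listed in the result is a candidate for powering down until demand rises again.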

Once embedded software becomes more nomadic, other ways to take advantage of the idea will doubtless appear.