In the year of publication, however, fully autonomous vehicles still seemed to be years away, and advanced driver-assistance system (ADAS) applications were much less common – in fact, the pace of disruptive change seemed glacial.

Fast forward to 2020 and things are very different. The rollout of 5G will have a significant influence, while the automotive-centric dedicated short-range communications (DSRC) standard is now in production vehicles, and with momentum growing, the deployment of DSRC to support ADAS features is set to expand. Meanwhile, features such as adaptive cruise control, automatic emergency braking and lane departure warning are becoming commonplace, enabling Level 2 autonomy in mainstream vehicles.

Carmakers, car-sharing companies and cash-rich internet companies around the world are investing heavily in developing autonomous vehicles.

One of the four trends, the evolution of electric vehicles, is fundamental to these developments. Sales are growing, in part because of improvements in battery technology, and we are approaching an inflection point as battery costs fall to a competitive level: at $100 per kWh, battery electric vehicles can compete with internal combustion engine vehicles.
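
To put the $100 per kWh threshold in concrete terms, the short Python calculation below multiplies it by a few pack capacities. The capacities (40, 60 and 100 kWh) and the function name are hypothetical, chosen only for illustration rather than taken from this article, and the result covers battery cost alone, not the cost of the rest of the vehicle.

```python
# Battery cost at the $100/kWh parity threshold cited in the text.
# Assumption: the pack capacities used below are hypothetical examples.

PARITY_USD_PER_KWH = 100.0


def pack_cost_usd(capacity_kwh: float, usd_per_kwh: float = PARITY_USD_PER_KWH) -> float:
    """Return battery cost in USD for a pack of the given capacity."""
    if capacity_kwh <= 0 or usd_per_kwh <= 0:
        raise ValueError("capacity and price must be positive")
    return capacity_kwh * usd_per_kwh


if __name__ == "__main__":
    for capacity in (40, 60, 100):  # hypothetical pack sizes in kWh
        print(f"{capacity} kWh at ${PARITY_USD_PER_KWH:.0f}/kWh = ${pack_cost_usd(capacity):,.0f}")
```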

Looking at these trends from the perspective of a semiconductor company, the complexity of developing ADAS and automated driving, as well as more established features such as infotainment, is now being addressed through a system-on-chip (SoC) approach.

The close integration of functions, made possible by SoCs based on the latest 7nm and 16nm processes, provides the performance needed to deliver these advanced features. In turn, this is having a disruptive effect on the way automotive SoCs are designed, accelerating the move to finer semiconductor process geometries.

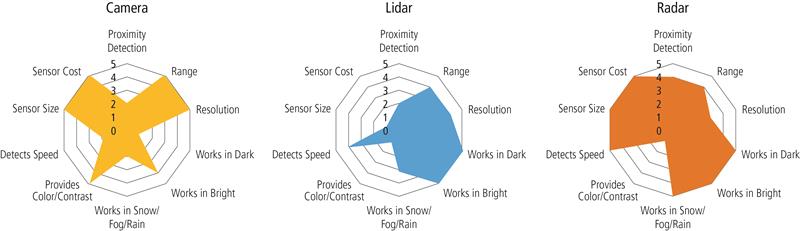

The main sensor most human drivers rely on is the eye. We can see in colour, perceive depth and distance, estimate speed and direction, and adjust to variable light conditions. If we apply these requirements to autonomous driving systems, it becomes clear just how big the task is. We are using image sensors, coupled to SoCs, to mimic the human experience. This involves synthesising information to make decisions, at the same speed and with the same accuracy as a human driver.

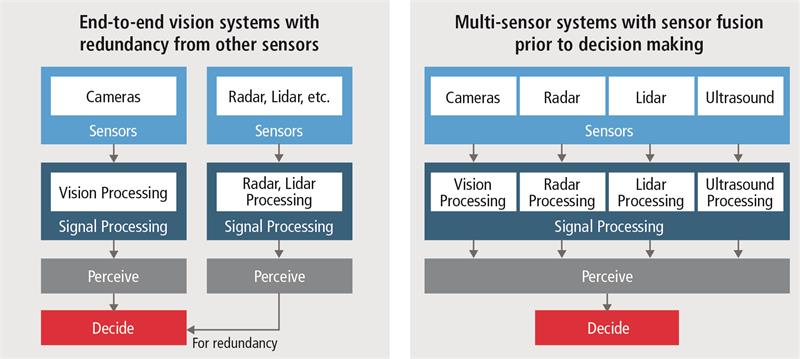

This will require more data than an image sensor can provide. Sensor fusion, based on multiple types of sensor technologies distributed around the vehicle, will become the “eyes” of the autonomous driver. This sensor data will need to be processed, typically by a single SoC, to reduce latency and avoid synchronisation issues.
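
Even when all sensor data lands on one SoC, a fusion stage still has to pair measurements captured at different rates. The Python sketch below shows one simple approach, nearest-timestamp matching within a tolerance, assuming every sensor stamps its samples against a shared clock. The `Sample` type, the `align` function and the sensor labels are hypothetical names for illustration; production fusion stacks often interpolate or filter (for example with Kalman filters) rather than simply pairing samples.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    t_us: int        # capture time in microseconds (assumed shared clock)
    sensor: str      # hypothetical label, e.g. "camera" or "radar"
    payload: object  # raw measurement; opaque in this sketch


def align(reference: list[Sample], other: list[Sample], tolerance_us: int) -> list[tuple[Sample, Sample]]:
    """Pair each reference sample with the nearest-in-time other sample.

    Pairs further apart than tolerance_us are dropped rather than fused
    with stale data.
    """
    other = sorted(other, key=lambda s: s.t_us)
    times = [s.t_us for s in other]
    pairs = []
    for ref in reference:
        i = bisect_left(times, ref.t_us)
        # The closest sample is either just before or just after ref.t_us.
        candidates = other[max(i - 1, 0): i + 1]
        if not candidates:
            continue
        best = min(candidates, key=lambda s: abs(s.t_us - ref.t_us))
        if abs(best.t_us - ref.t_us) <= tolerance_us:
            pairs.append((ref, best))
    return pairs


if __name__ == "__main__":
    camera = [Sample(t, "camera", None) for t in (0, 33_333, 66_667)]  # ~30 fps
    radar = [Sample(t, "radar", None) for t in (1_000, 51_000)]        # ~20 Hz
    for cam, rad in align(camera, radar, tolerance_us=10_000):
        print(f"camera@{cam.t_us}us <-> radar@{rad.t_us}us")
```

With a 10 ms tolerance only the first camera frame finds a radar sample close enough; widening the tolerance pairs more frames at the cost of fusing older measurements.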

The use of artificial intelligence (AI) to perform the data synthesis will also be essential, with the core intelligence also running on the SoC rather than in the cloud. These requirements are exerting extreme pressure on SoC designers.

Autonomous driving will require the vehicles to be capable of perceiving their environment. This means using sensors to monitor the road, other road users and the car itself. This “situational awareness” is essential to allow the vehicle to navigate safely, plan its ideal route and adapt its plans based on the prevailing and changeable conditions.

There are three main computational modalities that any autonomous system must use to achieve situational awareness: sensing, through image and signal processing; perceiving, using data analysis; and decision making, through the use of AI. All of these can only be executed through semiconductor technology and embedded software, namely the SoC.

SoCs for ADAS

Today, Level 3 autonomy is available in factory models, and OEMs are working on the SoCs needed to deliver Level 4. It is not clear whether the innovation will come from chips designed by the incumbent chip suppliers or from new entrants. As the sensor modality changes from cameras and radar (Level 2) to lidar, radar and ultrasonic sensors (Level 3 and Level 4), the sensor fusion element will become more complex.

Lidar has many benefits but is relatively expensive. Radar technology is more mature and more cost-effective for mainstream models, and there is speculation about whether it can become good enough that carmakers need not wait for lidar to mature and become commercially competitive.

Together, these sensors can address the primary demands of autonomy: distance detection, traffic signal recognition, lane detection, segmentation and mapping.

No single sensor technology can provide all of these, of course; only image sensors can “see” traffic signals, for example, while only radar is effective in rain or fog. With rapid advancements in radar technology, we may witness the deployment of next-generation imaging radar that approaches the capabilities of lidar at a fraction of the cost and reduces the amount of lidar needed in Level 3 and Level 4 autonomous vehicles.

There is some variation in the types of radar technology in use today. Short-range radar works well for object detection when moving slowly in parking situations, medium-range radar for detecting other vehicles in adjacent lanes, and long-range radar for detecting vehicles and other objects moving at speed.

Employing multiple types of radar technology puts greater emphasis on sensor fusion, with the bulk of the data processing performed by a central processor. This means the SoC will need to process data from whichever sensors are relevant as the sensing requirements change, for example when moving from a parking manoeuvre to motorway driving.
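
As a rough illustration of how a central processor might change which radar streams it prioritises as conditions change, the Python sketch below maps vehicle speed to the short-, medium- and long-range roles described above. The speed thresholds, the `RadarRange` enum and the `active_radars` function are hypothetical assumptions made for this example; a real system would also weigh factors such as planned manoeuvres, weather and sensor health.

```python
from enum import Enum


class RadarRange(Enum):
    SHORT = "short-range"    # slow manoeuvres such as parking
    MEDIUM = "medium-range"  # other vehicles in adjacent lanes
    LONG = "long-range"      # vehicles and objects at speed


# Hypothetical thresholds in km/h, chosen for illustration only.
PARKING_MAX_KMH = 15.0
HIGHWAY_MIN_KMH = 60.0


def active_radars(speed_kmh: float) -> set[RadarRange]:
    """Return the radar roles a central fusion SoC might prioritise.

    A simplified, speed-only policy: short range when crawling,
    medium range once moving, plus long range at higher speeds.
    """
    if speed_kmh < 0:
        raise ValueError("speed must be non-negative")
    if speed_kmh <= PARKING_MAX_KMH:
        return {RadarRange.SHORT}
    active = {RadarRange.MEDIUM}  # adjacent-lane monitoring once moving
    if speed_kmh >= HIGHWAY_MIN_KMH:
        active.add(RadarRange.LONG)  # look further ahead at speed
    return active


if __name__ == "__main__":
    for speed in (5, 50, 110):
        roles = sorted(r.value for r in active_radars(speed))
        print(f"{speed} km/h -> {roles}")
```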

This move towards centralised sensor fusion means that an ADAS SoC will really be a network on a chip, following a heterogeneous SoC architecture based on a central communications highway that connects functional blocks. These blocks will typically, but not exclusively, include image processing, radar, lidar, navigation and high-performance computing. Increasingly these will all be augmented using some form of AI.

Above: Examples of SoC architectures designed for autonomous driving applications

The horsepower will be provided by a combination of DSPs (such as the Tensilica Vision, Fusion and ConnX processors), multicore CPUs and, more recently, dedicated neural network processors such as the Tensilica DNA processor family for on-device AI. In order to feed these processing cores, high-speed interfaces will also be needed.

The SoCs being developed today use LPDDR4 at 4266Mbps, but to lower system power, designers are moving to LPDDR4X, which uses lower voltages but offers the same speed. Future designs will use LPDDR5 once the price is right, while DDR4/5 and GDDR6 will be used to meet the needs of AI acceleration. MIPI is expected to remain the interface of choice for cameras, and there is some speculation about whether MIPI A-PHY will provide the interconnect for the many sensors needed.
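
The 4266Mbps figure is a per-pin data rate, so the bandwidth available to the processing cores depends on the width of the memory interface. The Python calculation below assumes a 16-bit LPDDR4 channel and a hypothetical four-channel (64-bit) configuration; it gives theoretical peak figures only, and sustained bandwidth in a real system will be lower.

```python
"""Theoretical peak DRAM bandwidth from per-pin data rate and bus width."""

LPDDR4_RATE_MBPS = 4266   # per-pin data rate cited in the text
LPDDR4_CHANNEL_BITS = 16  # width of one LPDDR4 channel


def peak_bandwidth_gb_per_s(rate_mbps_per_pin: float, bus_width_bits: int) -> float:
    """Return theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    if rate_mbps_per_pin <= 0 or bus_width_bits <= 0:
        raise ValueError("rate and width must be positive")
    bits_per_second = rate_mbps_per_pin * 1e6 * bus_width_bits
    return bits_per_second / 8 / 1e9  # bits -> bytes -> gigabytes


if __name__ == "__main__":
    # One channel, then a hypothetical four-channel (64-bit) interface.
    for channels in (1, 4):
        width = channels * LPDDR4_CHANNEL_BITS
        bw = peak_bandwidth_gb_per_s(LPDDR4_RATE_MBPS, width)
        print(f"{width}-bit LPDDR4 at {LPDDR4_RATE_MBPS} Mbps/pin: {bw:.3f} GB/s peak")
```

Because LPDDR4X runs at the same data rate, the same peak figures apply to it; the saving is in power, not bandwidth.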

To support the relatively long distances that data must travel across a vehicle's network, the use of Gigabit Ethernet (GbE) is expected to increase.

For storage needs, designers rely on standard flash interfaces, such as eMMC, SD and UFS. Of course, in order to be deployed in automotive applications, the underlying IP used needs to be compliant with AEC-Q100 and ISO 26262:2018.

Conclusion

As the electronic content of vehicles has increased, the semiconductor industry has responded with integrated devices based on the most appropriate process. Today, the demand for high-performance processing is pushing the industry to migrate beyond 28nm processes to achieve acceptable performance levels.

With the wider adoption of ADAS and the demands it brings, semiconductor manufacturers are now looking towards the very latest processes; 16nm, or even 7nm, processes are needed in order to enable the latest features.

There is now a clearer roadmap leading to Level 5 autonomy, even if that destination is still some way off. Using sensors to mimic a human driver comes with its challenges; AI is easing some of those challenges, but the underlying technology will still rely heavily on semiconductor technology that will need to work reliably for 10 or more years.

Author details: Thomas Wong, Director of Marketing, Automotive Segment, Design IP Group, Cadence