It all comes down to design complexity. As many SoC designs pass the 100 million gate mark, they also integrate large numbers of cores – symmetric and asymmetric, heterogeneous and homogeneous – as well as a range of IP. If that wasn't enough to deal with, those designs now use schemes that vary the supply voltage or even shut blocks down when they aren't required.

How can designers make sure the cores work together as intended? How can they ensure the SoC runs correctly at all supply voltages? And how can they investigate those 'corner cases' to ensure that performance remains unaffected when 'this, this and this' happens?

Representing more than 70% of the cost of developing an SoC, verification is seen as the critical factor in getting products to market on time. Recognising this, EDA companies have had verification firmly in their sights for some years, and some of the leaders have recently made strategic acquisitions to boost their capabilities. Cadence, for example, spent $170 million in 2014 to acquire Jasper Design Automation for its formal analysis technology.

Cadence isn't just focused on software; in 2013, it launched the Palladium XP II Verification Computing Platform with the aim of speeding hardware and software verification 'significantly'.

In an attempt to provide some idea of the verification problem, Frank Schirrmeister, director of product management and marketing in Cadence's System and Verification Group, said it was like navigating through London's traffic on a bad day.

"You need to verify that, for example, an SoC in a phone can decode video, transmit and display it. If you translate that into what's happening with traffic, it's like having to know which streets are one way, which is the best route to take at which time of day and so on.

"It's the same in the chip world. Your SoC may need to use compute resources to decode a video to a particular resolution, but those resources may not be available when needed. The problem becomes complex."

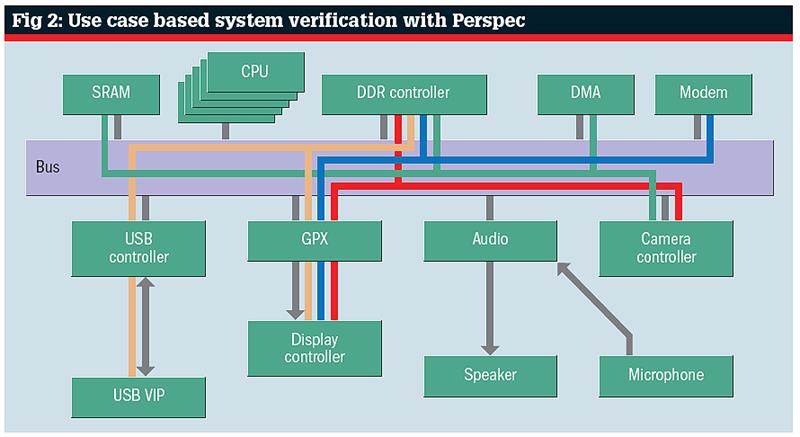

Cadence's latest contribution to solving the verification challenge comes in the shape of the Perspec System Verifier platform. According to the company, Perspec can help to improve SoC quality by accelerating the development of complex software-driven tests that find and fix SoC-level bugs.

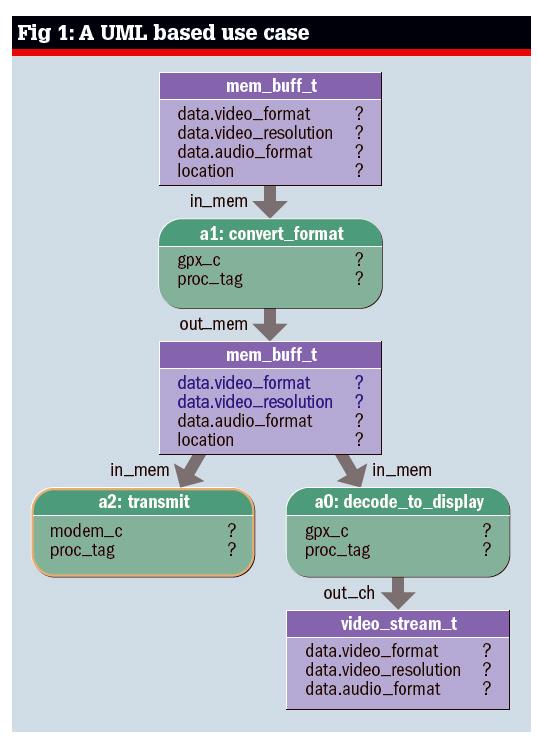

Amongst its features are: a Unified Modeling Language (UML) based view of system-level actions and resources, which gives an intuitive picture of hard-to-test system-level interactions; solver technology that automates the generation of portable tests; and tests that run on pre-silicon verification platforms, including simulation, acceleration and emulation, and virtual and FPGA prototyping.

Schirrmeister described Perspec as the latest stage in an evolution of verification technology. "We have been seeing shifts in verification from the brute-force approach of directed testing – the 'stone age' of verification – to hardware verification languages to metric-driven verification. What we're doing with Perspec is adding scenarios and software-driven verification to give a top-down view."

In Schirrmeister's opinion, engineers in the 'stone age' applied ad hoc testbenches and hand-written tests. Over time, the industry moved to coverage-driven verification, with a constrained-random stimulus approach automating test creation. Today, SoC verification is software driven, with 'top-down' use-case testing, automatic generation of software tests and the ability to reuse those tests across a range of platforms.
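To make the coverage-driven, constrained-random step more concrete, here is a minimal Python sketch. The transaction format, its constraints and the coverage bins are all hypothetical, chosen purely for illustration; production flows typically express this in a hardware verification language such as SystemVerilog, with a dedicated constraint solver and a scoreboard that checks the design's responses, which this sketch omits.

```python
import random
from dataclasses import dataclass

# Hypothetical bus transaction and constraints, for illustration only.
KINDS = ("READ", "WRITE", "NOP")
LENGTH_BINS = {"small": range(1, 9), "medium": range(9, 33), "large": range(33, 65)}

@dataclass(frozen=True)
class Transaction:
    kind: str
    address: int
    length: int

def random_transaction(rng: random.Random) -> Transaction:
    """Draw one random transaction that satisfies the constraints."""
    kind = rng.choice(KINDS)
    address = rng.randrange(0, 4096, 4)                  # 4-byte aligned, 4 KiB window
    length = 0 if kind == "NOP" else rng.randint(1, 64)  # NOPs carry no payload
    return Transaction(kind, address, length)

def length_bin(length: int) -> str:
    """Map a payload length to its coverage bin ('none' for zero length)."""
    for name, values in LENGTH_BINS.items():
        if length in values:
            return name
    return "none"

# Coverage goal: every READ/WRITE length bin, plus at least one NOP.
GOAL = {(k, b) for k in ("READ", "WRITE") for b in LENGTH_BINS} | {("NOP", "none")}

def run_until_covered(seed: int = 1, max_tests: int = 10_000):
    """Generate constrained-random tests until the coverage goal is met."""
    rng = random.Random(seed)
    hit, tests = set(), []
    while hit != GOAL and len(tests) < max_tests:
        txn = random_transaction(rng)
        tests.append(txn)
        hit.add((txn.kind, length_bin(txn.length)))
    return tests, len(hit & GOAL) / len(GOAL)

if __name__ == "__main__":
    tests, coverage = run_until_covered()
    print(f"{len(tests)} tests, {coverage:.0%} coverage")
```

The point of the loop is that the engineer writes constraints and coverage goals rather than individual tests; the generator keeps producing legal stimulus until the goal is met or a budget runs out.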

Despite this progress, gaps remain, says Cadence. These include:

- How to develop and debug the tests?

- How to measure what has been tested?

- How to reuse tests developed for IP at the system level?

- How to develop tests that work on all validation platforms?

- What tests to develop?

Perspec System Verifier is said to meet these requirements through abstraction, automation, platforms, measurement and leverage. Abstraction allows the designer to create use cases via a UML-style diagram, and automation then generates complex tests that, says Cadence, couldn't be written manually. Platform support means tests run on a range of pre- and post-silicon platforms at each platform's speed, whilst measurement provides data on coverage, flows and dependencies. Finally, leverage allows use cases to be shared.

Use cases allow complex tasks to be stated simply. One example provided by Cadence is a use case for a mobile phone: 'view a video while uploading it'. Translated to the system level, the task becomes 'take a video buffer and convert it to MPEG-4 format at medium resolution using any available graphics processor. Then transmit the result through the modem via any available communications processor and, in parallel, decode it using any available graphics processor and display the video stream on any of the SoC displays supporting the resulting resolution'.
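One way to picture that system-level description is as a graph of actions and their dependencies. The sketch below is an illustration under stated assumptions, not Perspec's own notation: the action names are hypothetical, and it uses Python's standard `graphlib` module (Python 3.9 or later) to group actions into stages whose members can run in parallel, such as transmitting and decoding the encoded stream.

```python
from graphlib import TopologicalSorter

# Hypothetical actions for 'view a video while uploading it'.
# Each action maps to the set of actions that must finish before it starts.
SCENARIO = {
    "take_video_buffer": set(),
    "encode_mpeg4_medium": {"take_video_buffer"},
    "transmit_via_modem": {"encode_mpeg4_medium"},
    "decode_on_gpu": {"encode_mpeg4_medium"},
    "display_stream": {"decode_on_gpu"},
}

def parallel_stages(graph):
    """Group actions into stages; actions within a stage have no mutual dependencies."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for a deterministic order
        stages.append(ready)
        sorter.done(*ready)
    return stages

if __name__ == "__main__":
    for number, stage in enumerate(parallel_stages(SCENARIO), start=1):
        print(number, stage)
```

This layered schedule is conservative: `display_stream` waits for the whole stage containing `transmit_via_modem`, although only `decode_on_gpu` is a true prerequisite. A real scenario tool would also track which resources each action occupies.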

Schirrmeister noted: "Perspec asks for abstract descriptions in C to test these scenarios. There's a constraint-solving element and the output is a set of tests which can run on a range of platforms, including FPGA-based verification.

"It's driving verification into a new area," he concluded, "but it's also adding to existing methods, which won't go away."

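The constraint-solving step can be approximated in miniature: given a set of SoC resources, enumerate every assignment of the use case's actions to resources that satisfies its constraints, with each legal assignment becoming one candidate test. The resource names and display capabilities below are hypothetical, and exhaustive enumeration with `itertools.product` stands in for a real constraint solver, which would more typically sample solutions than list them all.

```python
from itertools import product

# Hypothetical SoC resources; a real flow would derive these from a platform model.
GPUS = ["gpu0", "gpu1"]
COMM_PROCS = ["cp0", "cp1"]
# Hypothetical displays and the resolutions each one supports.
DISPLAYS = {"main_lcd": {"low", "medium", "high"}, "sub_lcd": {"low"}}

def legal_tests(resolution="medium"):
    """Enumerate resource assignments for 'view a video while uploading it'."""
    tests = []
    # 'Any available' processor: every GPU and comms processor is a candidate.
    for enc_gpu, dec_gpu, comm, display in product(GPUS, GPUS, COMM_PROCS, DISPLAYS):
        # Constraint: the chosen display must support the encoded resolution.
        if resolution not in DISPLAYS[display]:
            continue
        tests.append({
            "encode": enc_gpu,
            "transmit": comm,
            "decode": dec_gpu,
            "display": display,
            "resolution": resolution,
        })
    return tests

if __name__ == "__main__":
    for test in legal_tests():
        print(test)
```

Under these assumptions, medium resolution rules out `sub_lcd`, leaving 2 × 2 × 2 = 8 candidate tests; each could then be rendered as software for whichever platform (simulation, emulation or FPGA prototype) is running it.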
Swarming to solve the problem

A US academic plans to harness swarm intelligence based on the behaviour of ants and apply it to verification. Michael Hsiao, professor of electrical and computer engineering at Virginia Tech, has been conducting long-term research into algorithms that simulate the methods used by ant colonies to find the most efficient route to food sources.

His method uses an automatic stimuli generator to create a database of possible vectors, which is then populated by a swarm of intelligent agents. Like ants, these agents deposit a pheromone along their paths that attracts other agents. The pheromone 'evaporates' over time, reinforcing the most efficient pathways.

"In this regard," he says, "the proposed swarm-intelligent framework emphasises the effective modelling and learning from collective effort by extracting the intelligence acquired during the search over multiple abstract models."

The simulation loops through multiple runs. The branches with the highest fitness values are removed so the system can focus on the rarely visited branches – a way of finding and testing 'hard corners'. In a benchmark test validating a particular circuit, this method achieved nearly 97% coverage in 11.95 s, compared with 91% coverage in 327 s for a deterministic approach.

Prof Hsiao hopes the work will lead to a deeper understanding of the validation of large, complex designs and help cut the overall cost of the design process. "The success of this project not only will push the envelope on design validation, but will also offer new stimuli generation methods to related areas, such as post-silicon validation and validating trust of hardware," he concluded.
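The pheromone mechanism can be illustrated with a generic ant-colony sketch. This is not Prof Hsiao's framework: the design under test is a hypothetical toy sequence detector whose deepest state is the 'hard corner', and rewarding only newly covered branches is a simple stand-in for steering the swarm towards rarely visited branches. Each ant builds an input vector bit by bit, preferring decisions with stronger pheromone, deposits pheromone along its path in proportion to the new coverage it found, and all trails evaporate after every round.

```python
import random

# Hypothetical toy design: a sequence detector with states 0..7.
# In state s < 7, input bit KEY[s] advances to s + 1; the other bit resets to 0.
KEY = [1, 0, 1, 1, 0, 0, 1]
FINAL = len(KEY)  # reaching state 7 is the 'hard corner'
ALL_BRANCHES = {(s, b) for s in range(FINAL + 1) for b in (0, 1)}

def step(state, bit):
    """Next-state function of the toy design."""
    if state == FINAL:
        return state  # the final state is absorbing
    return state + 1 if bit == KEY[state] else 0

def simulate(vector):
    """Return the set of (state, bit) branches exercised by an input vector."""
    state, branches = 0, set()
    for bit in vector:
        branches.add((state, bit))
        state = step(state, bit)
    return branches

def ant_colony(n_ants=20, n_iters=50, length=12, rho=0.1, seed=0):
    """Ant-colony-style search for input vectors that maximise branch coverage."""
    rng = random.Random(seed)
    tau = {br: 1.0 for br in ALL_BRANCHES}  # pheromone per (state, bit) decision
    covered = set()
    for _ in range(n_iters):
        for _ in range(n_ants):
            # Each ant builds a vector, biased towards decisions with more pheromone.
            state, vector = 0, []
            for _ in range(length):
                p_one = tau[(state, 1)] / (tau[(state, 0)] + tau[(state, 1)])
                bit = 1 if rng.random() < p_one else 0
                vector.append(bit)
                state = step(state, bit)
            branches = simulate(vector)
            fitness = len(branches - covered)  # reward only newly reached branches
            covered |= branches
            for br in branches:  # deposit pheromone along this ant's path
                tau[br] += fitness
        for br in tau:  # evaporation weakens trails that stop paying off
            tau[br] *= 1.0 - rho
    return covered

if __name__ == "__main__":
    print(len(ant_colony()), "of", len(ALL_BRANCHES), "branches covered")
```

Because evaporation scales every trail by the same factor, it never changes a single decision's odds on its own; what shifts the odds is fresh deposits, so paths that keep uncovering new branches come to dominate.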